Generative vs. Predictive AI

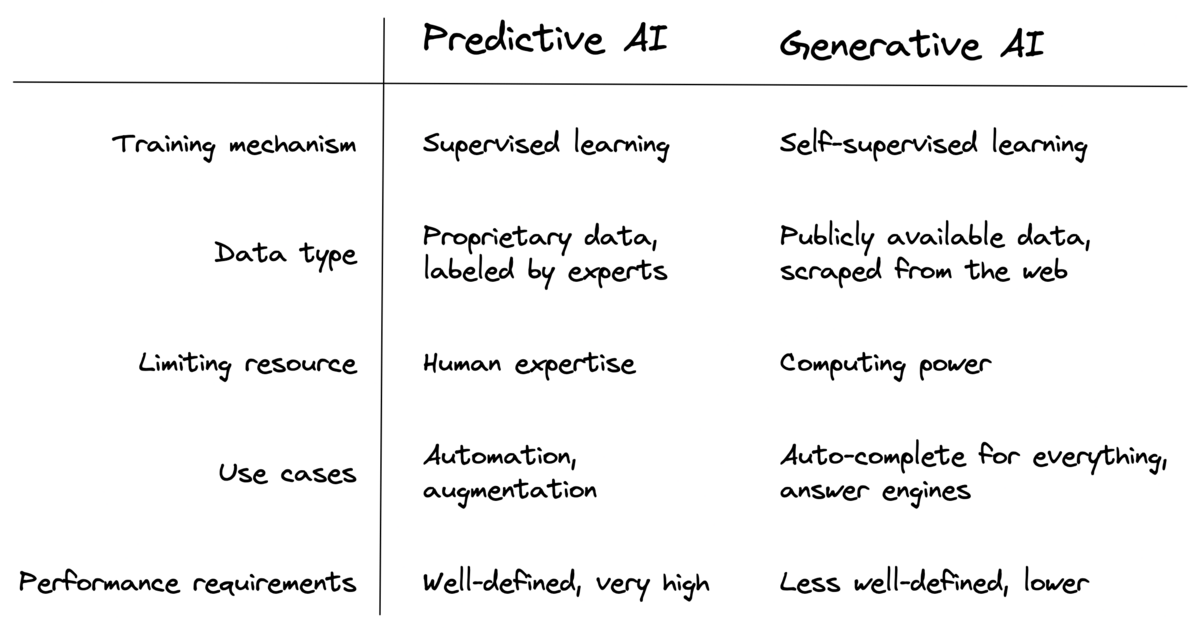

Comparing the two fundamental approaches to AI development

Everybody is talking about Generative AI these days.

Even relatives and friends who usually don't care about my job suddenly ask me about things like ChatGPT and Midjourney.

However, generative models can't solve all the problems we typically address with AI.

This is where predictive AI comes in.

In this issue, I want to address the differences between these fundamental approaches, and why they are both needed.

Let's dive in.

Note: If you're an AI expert, this issue might not contain any new information. Feel free to skip this one, or stick around if you want to review some fundamentals.

Predictive AI: The Veteran

Predictive AI is concerned with labeling a data point.

The label can be a categorical value (as in classification tasks), a continuous value (as in regression tasks), or a collection of such values (e.g., a bounding box consisting of four coordinates and an object category).

We typically train predictive AI systems using supervised learning.

That means we pass data points that were previously labeled by human experts to a machine learning algorithm with the task of detecting patterns.

These patterns are stored in the form of models (e.g., deep neural networks or decision trees) and can be used to make predictions on unseen data points.
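To make this workflow concrete, here is a minimal sketch in plain Python. It uses a nearest-centroid classifier, which I picked for brevity as a stand-in and not because it is one of the model types named above. The toy transaction data, the two features, and the function names `fit` and `predict` are all made up for illustration.

```python
from math import dist

def fit(points, labels):
    """Learn one centroid (mean point) per label from labeled examples."""
    sums, counts = {}, {}
    for point, label in zip(points, labels):
        acc = sums.setdefault(label, [0.0] * len(point))
        for i, value in enumerate(point):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: tuple(v / counts[label] for v in acc) for label, acc in sums.items()}

def predict(model, point):
    """Label an unseen point with the class of the closest centroid."""
    return min(model, key=lambda label: dist(model[label], point))

# Hypothetical data: (amount in dollars, hour of day), labeled by a human expert
train_points = [(12.0, 14), (25.0, 10), (18.0, 16), (950.0, 3), (1200.0, 2), (800.0, 4)]
train_labels = ["legit", "legit", "legit", "fraud", "fraud", "fraud"]

model = fit(train_points, train_labels)   # the "patterns" are the two centroids
print(predict(model, (20.0, 13)))         # unseen data point
print(predict(model, (1000.0, 3)))        # another unseen data point
```

The stored "model" here is just two averaged points. A deep neural network or decision tree plays the same role, only with far more capacity to capture complex patterns.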

Examples of predictive AI systems I've worked on in the past are:

Supporting lung cancer diagnosis by detecting tumors in chest CTs

Detecting defects as part of automated quality inspection in manufacturing lines

Fraud detection in credit card transactions

Sales and utilization forecasting for public transport

What do all of these use cases have in common?

They try to automate or augment a process that was previously handled by a human.

In these scenarios, we have well-defined and very high performance requirements.

For tumor diagnosis and quality inspection, these are usually in the 95%+ range for metrics like precision and recall.
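For readers who haven't worked with these metrics: precision is the share of predicted positives that are correct, and recall is the share of actual positives that the model finds. The sketch below computes both in plain Python. The function name `precision_recall` and the labels are made up for illustration, not taken from a real project.

```python
def precision_recall(y_true, y_pred, positive=1):
    """Return (precision, recall) for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)  # correct alarms
    fp = sum(1 for t, p in pairs if t != positive and p == positive)  # false alarms
    fn = sum(1 for t, p in pairs if t == positive and p != positive)  # missed cases
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall

# Made-up labels: 1 = tumor present, 0 = no tumor
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]

precision, recall = precision_recall(y_true, y_pred)
print(f"precision={precision:.2f}, recall={recall:.2f}")
```

In this toy example, one false alarm and one missed tumor out of ten scans already pull both metrics down to 75%, which shows how little room for error a 95%+ target leaves.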

Performance at this level can only be achieved by collecting large amounts of data, carefully labeled by experts like physicians.

That's why these systems are very hard to build in practice.

While predictive AI research has been around for a long time, it took off with the beginning of the deep learning era in 2012.

The emergence of deep learning allowed a larger variety of problems to be tackled, especially those involving unstructured data like text and images.

Now, let's contrast this with generative AI.

Generative AI: The New Kid on the Block

Instead of labeling data points, generative AI is concerned with creating entirely new data points.

I know this sounds abstract. Let me illustrate this with recent examples:

ChatGPT generates text based on a given user query (usually also text-based)

Midjourney generates images based on a text-based user prompt

Whisper generates transcripts for audio files

In the past, these kinds of models were thought of as being harder to train than predictive AI systems.

Why?

Because the model needs to learn the underlying data distribution instead of focusing on the most important patterns (as in predictive AI).

Wait, but why are generative models so much more popular than their predictive counterparts?

Good question. To answer it, we have to look at two things: the training mechanism and applications of generative AI.

Let's start with the training mechanism.

Generative AI models are typically trained in a self-supervised fashion on publicly available data.

Take GPT-3 as an example, the model family behind the first ChatGPT version (which was fine-tuned from a GPT-3.5 model).

GPT-3 was trained by collecting a large share of the publicly available text on the internet, and then training an autoregressive language model on this text corpus.

Autoregressive language models aim to predict the next word (or word piece, to be precise) given the previous words.

Of course, I'm oversimplifying here, but you get the point.
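If you want to see the idea in code, here is a toy sketch in plain Python. It is a bigram model that only looks at the single previous word and counts what follows it, so it is nowhere near how GPT-3 actually works. The corpus and the function names `train_bigram` and `generate` are made up for illustration. Note that no human labeling is involved: the "label" for each word is simply the word that comes next in the text.

```python
from collections import Counter, defaultdict

def train_bigram(tokens):
    """Count how often each token follows each preceding token."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1   # the next token acts as the training label
    return counts

def generate(counts, start, max_tokens=5):
    """Greedy decoding: repeatedly append the most frequent next token."""
    output = [start]
    for _ in range(max_tokens):
        followers = counts.get(output[-1])
        if not followers:        # token never seen with a successor
            break
        output.append(followers.most_common(1)[0][0])
    return output

corpus = "the cat sat on the mat . the cat sat on the sofa".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "the", max_tokens=3)))
```

Each generated word is fed back in as context for the next prediction, which is what "autoregressive" refers to.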

This kind of training mechanism is much more scalable than supervised learning.

It has resulted in the creation of large-scale foundation models such as GPT-4 and Stable Diffusion which form the basis for most generative AI apps.

The primary limiting resource was (and still is) computing power.

Given the enormous progress in, and availability of, accelerator chips, this resource is easier to come by than human expertise (as required for predictive AI).

Now, let's look at the typical applications of generative AI.

As of today, we have two main use cases (read more in this post):

Autocomplete for everything (e.g., writing assistants, code auto-completion)

Answer engines (e.g., web search, chat-your-data apps)

What these use cases have in common is that they increase human productivity in tasks like writing, research, design, or programming.

These tasks are more subjective and typically have less well-defined performance requirements.

Thus, you can launch products with 80%+ accuracy levels because a human will always be in the loop.

Case in point: ChatGPT still hallucinates a lot of facts, yet it reached 100M users faster than any application before it.

Bottom Line: Why Has Generative AI Overtaken Predictive AI?

To summarize, we have a technology whose limiting factor is more readily available (compute vs. human expertise) and whose performance requirements are lower.

It's no wonder that generative AI achieved widespread adoption quicker than predictive AI.

Still, both approaches have their raison d'être for now.

As of today, predictive AI is more suitable for problems where proprietary data and deep human expertise are required.

As AI practitioners or executives, we need to understand when each approach is more suitable for solving a specific business problem.

What do you think about this topic? Please let me know by replying to this email or DM’ing me on LinkedIn or Twitter.

If you came across this article on my website, please consider subscribing to my newsletter to receive these kinds of insights straight in your inbox. You can find the archive of previous issues here.